
Challenge: AI is now making real-time decisions inside your contact centre — but who’s policing it, and how do you know it’s right?

Contact centres have always measured people: occupancy, adherence, AHT, QA scores, service levels.

But now AI is stepping onto the front line: forecasting, routing, summarising, analysing sentiment, classifying intents, recommending next best actions and even coaching agents in real time.

And here’s the uncomfortable truth no one is talking about:

We understand how to measure humans. We don’t yet understand how to measure digital decision-makers.

AI Is the Ultimate Workforce Intelligence Use Case

AI thrives in exactly the kind of environment contact centres operate in: high volume, high variability, constant change and vast amounts of historical data.

In fact, contact centres now sit on one of the richest sources of operational, behavioural and experience data in the enterprise, making them a natural training ground for machine-led optimisation:

- Real-time feeds across voice, chat, CRM, WFM and QA

- Millions of historical interactions

- Predictable queue patterns

- Behavioural variance between agents and customers

- Sentiment, context and intent across interactions

This is exactly where AI shines: crunching enormous volumes of data, predicting what’s coming next, and adapting staffing, routing, coaching and priorities in real time.

But the moment AI starts making decisions, a bigger question emerges…

If AI Is Making Decisions, What Metrics Define Good Performance?

What are the AI equivalents of:

- Adherence?

- Accuracy?

- Bias?

- Escalation rates?

- Impact on customer sentiment?

- Forecasting variance?

- SLA contribution?

- Channel-switch reduction?

- Error rates that never surface in traditional QA?

And critically:

When AI makes a wrong decision, who catches it?

Today, supervisors catch human mistakes.

Tomorrow, supervisors may need to oversee algorithmic mistakes.

This flips the WFM and QA model on its head.
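Several of the metric questions above translate into numbers fairly directly. The Python sketch below is a minimal illustration, assuming a hypothetical log of AI routing decisions; the `RoutingDecision` fields and the function names are illustrative only, not an emite or vendor API. It computes intent-classification accuracy, escalation rate, and forecast error expressed as mean absolute percentage error (MAPE), which is one common way, among several, to quantify forecasting variance.

```python
from dataclasses import dataclass


@dataclass
class RoutingDecision:
    """One entry in a hypothetical log of AI routing decisions."""
    predicted_intent: str  # intent the model assigned when routing the contact
    actual_intent: str     # intent confirmed afterwards (e.g. from wrap-up codes or QA review)
    escalated: bool        # True if the contact had to be escalated after routing


def intent_accuracy(decisions: list[RoutingDecision]) -> float:
    """Share of decisions where the predicted intent matched the confirmed intent."""
    if not decisions:
        raise ValueError("no decisions to evaluate")
    correct = sum(d.predicted_intent == d.actual_intent for d in decisions)
    return correct / len(decisions)


def escalation_rate(decisions: list[RoutingDecision]) -> float:
    """Share of AI-routed contacts that ended up escalated."""
    if not decisions:
        raise ValueError("no decisions to evaluate")
    return sum(d.escalated for d in decisions) / len(decisions)


def forecast_mape(forecast: list[float], actual: list[float]) -> float:
    """Mean absolute percentage error (in %) between forecast and actual volumes per interval."""
    if len(forecast) != len(actual) or not actual:
        raise ValueError("forecast and actual must be non-empty and the same length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined for intervals with zero actual volume")
    errors = [abs(f - a) / a for f, a in zip(forecast, actual)]
    return 100 * sum(errors) / len(errors)


# Illustrative data only.
decisions = [
    RoutingDecision("billing", "billing", escalated=False),
    RoutingDecision("billing", "cancellation", escalated=True),
    RoutingDecision("tech_support", "tech_support", escalated=False),
    RoutingDecision("cancellation", "cancellation", escalated=True),
]
print(intent_accuracy(decisions))  # 0.75
print(escalation_rate(decisions))  # 0.5
print(round(forecast_mape([100, 120, 80], [110, 120, 100]), 1))  # 9.7
```

A single accuracy or MAPE figure says nothing about bias on its own, so in practice these numbers would likely be broken down by queue, channel or customer segment before anyone draws conclusions.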

Welcome to the Era of Digital Workforce Management

Leaders must now manage two workforces:

- Human agents — trained, coached, performance-scored

- AI engines and decision models — monitored, validated, benchmarked

This raises entirely new operational questions:

- What is an acceptable error margin for routing decisions?

- How do we detect when an AI model drifts or becomes less reliable?

- Should AI forecasting be compared to historical patterns or agent-driven outcomes?

- Who is accountable when automated decisions lead to SLA breaches?

- What constitutes competency for an AI assistant?

- How do we certify AI performance for compliance, risk and CX governance?

These are no longer theoretical — contact centres adopting AI today are already wrestling with them.
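On the drift question, one widely used technique is the Population Stability Index (PSI), which compares how a model’s outputs were distributed in a baseline period with how they are distributed now. The sketch below is an illustration under stated assumptions, not emite’s method: it applies PSI to the queues a hypothetical AI router assigned contacts to, and the 0.1 and 0.25 thresholds are common rules of thumb rather than a standard.

```python
import math
from collections import Counter
from typing import Iterable


def population_stability_index(baseline: Iterable[str], current: Iterable[str],
                               epsilon: float = 1e-4) -> float:
    """PSI between two samples of categorical model outputs (e.g. assigned queues).

    epsilon floors the share of any category missing from one sample,
    so the logarithm stays finite.
    """
    base_counts = Counter(baseline)
    cur_counts = Counter(current)
    base_total = sum(base_counts.values())
    cur_total = sum(cur_counts.values())
    if base_total == 0 or cur_total == 0:
        raise ValueError("both samples must be non-empty")

    psi = 0.0
    for category in set(base_counts) | set(cur_counts):
        p_base = max(base_counts[category] / base_total, epsilon)
        p_cur = max(cur_counts[category] / cur_total, epsilon)
        psi += (p_cur - p_base) * math.log(p_cur / p_base)
    return psi


def drift_status(psi: float, warn: float = 0.1, alert: float = 0.25) -> str:
    """Map a PSI score to a label using assumed rule-of-thumb thresholds (tune per model)."""
    if psi < warn:
        return "stable"
    if psi < alert:
        return "investigate"
    return "drift"


# Hypothetical routing outcomes: a baseline period vs this week's decisions.
baseline = ["billing"] * 50 + ["tech_support"] * 30 + ["sales"] * 20
this_week = ["billing"] * 20 + ["tech_support"] * 60 + ["sales"] * 20

score = population_stability_index(baseline, this_week)
print(round(score, 3), drift_status(score))  # 0.483 drift
```

Because PSI works on output distributions, it can flag a shift before outcome labels such as confirmed intents are available. It cannot say whether the shift is wrong, only that it deserves a closer look.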

Why This Requires an Intelligence Layer — Not Just an AI Layer

AI can only be trusted if it is fed consistent, contextual, unified data.

And the performance of AI must be evaluated with the same clarity applied to human staff.

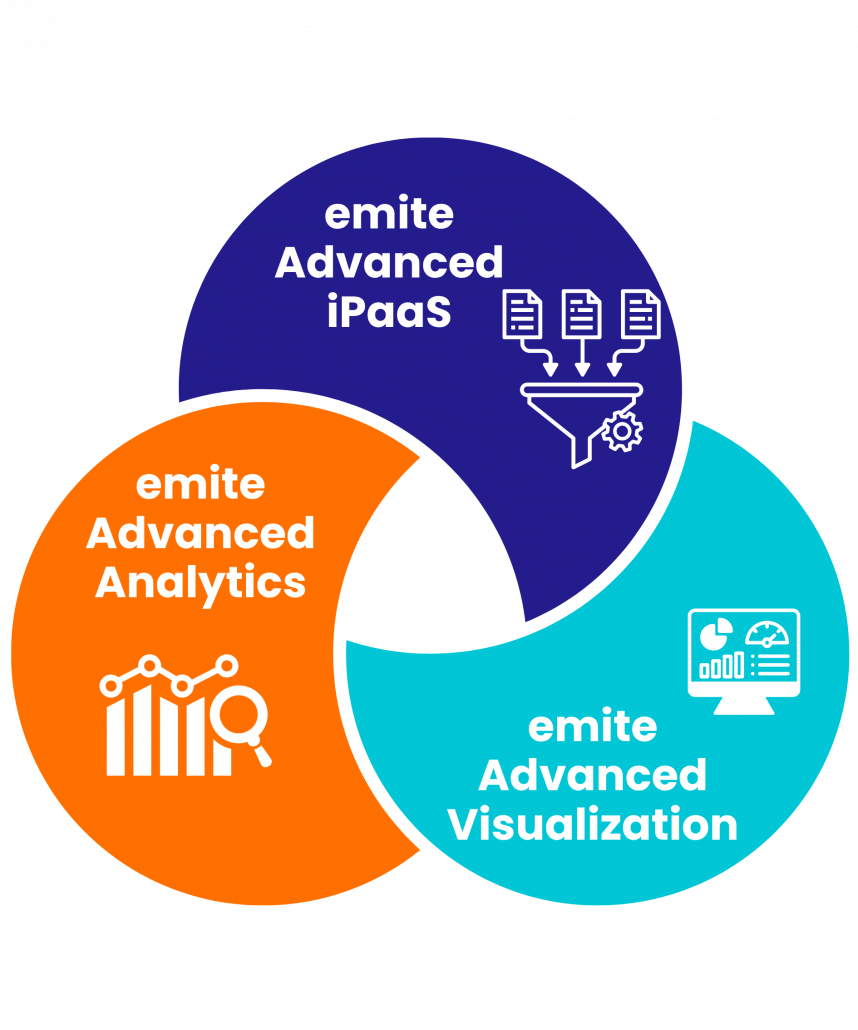

This is where emite becomes essential.

emite enables AI-ready Workforce Intelligence by:

✔ Unifying every data source (voice, chat, CRM, WFM, QA, routing engines, sentiment, back office)

✔ Providing real-time feeds that AI models need for adaptive decision-making

✔ Correlating outcomes so AI-driven decisions can be measured against actual CX impact

✔ Establishing baseline metrics for evaluating AI performance

✔ Detecting anomalies and drift when AI decisions deviate from expected patterns

✔ Visualising AI vs. human contribution to CX, SLA, and operational outcomes

✔ Giving leaders the oversight needed to govern both agent and algorithmic performance

In other words:

Before you can trust AI with decisions, you need emite to verify that those decisions are correct, measurable and accountable.
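To make “correlating outcomes” and “AI vs. human contribution” more concrete, here is a minimal Python sketch under stated assumptions: each interaction record carries a hypothetical `decided_by` field saying whether an AI model or a person made the routing decision, and service level is taken as the share of contacts answered within an assumed 20-second threshold. None of these names come from emite; they are illustrative only.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interaction:
    decided_by: str      # hypothetical field: "ai" or "human" made the routing decision
    wait_seconds: float  # time the customer waited before being answered
    csat: Optional[int]  # post-contact survey score, None if no survey came back


def outcomes_by_source(interactions: list[Interaction], sla_seconds: float = 20) -> dict:
    """Service level and average CSAT, grouped by who made the routing decision."""
    groups: dict[str, list[Interaction]] = defaultdict(list)
    for it in interactions:
        groups[it.decided_by].append(it)

    report = {}
    for source, items in groups.items():
        answered_in_time = sum(it.wait_seconds <= sla_seconds for it in items)
        scores = [it.csat for it in items if it.csat is not None]
        report[source] = {
            "contacts": len(items),
            "service_level": answered_in_time / len(items),
            "avg_csat": sum(scores) / len(scores) if scores else None,
        }
    return report


# Illustrative data only.
interactions = [
    Interaction("ai", wait_seconds=10, csat=5),
    Interaction("ai", wait_seconds=30, csat=None),
    Interaction("human", wait_seconds=15, csat=4),
    Interaction("human", wait_seconds=5, csat=2),
]
for source, stats in outcomes_by_source(interactions).items():
    print(source, stats)
```

A side-by-side comparison like this is descriptive, not causal: if AI routing handles a different mix of contacts than people do, the gap may reflect that mix rather than decision quality, so a fair evaluation would compare like-for-like segments or use a controlled test.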

The Future Workforce Is Human + AI — But Managed With Data

AI will elevate forecasting accuracy.

AI will optimise staffing levels.

AI will adapt routing based on sentiment, context and intent.

AI will assist agents in calls and chats.

AI will help predict channel spikes and reduce queue fatigue.

But none of this works unless leaders can:

- measure AI outcomes

- govern AI behaviour

- audit AI decisions

- ensure ethical and unbiased performance

- continuously refine model accuracy

The contact centre workforce of the future isn’t just people — it’s people augmented by AI.

And both require structured performance management.